Executive Summary

Use Case: Credit Risk Scoring (Retail – Anonymized) · Segment: Unsecured Lending · Model: Gradient Boosted Trees

This sample illustrates how RiskAI generates audit-ready evidence for banking: policy→procedure→workflow mapping, model risk governance coverage, validation results, approvals, and post-deployment monitoring (with lineage and immutable audit logs).

Binder Contents

1. Model Overview & Ownership

- Business context & KPIs (PD lift, approval rate, loss rate)

- Data sources & lineage (core banking, bureaus, alt-data)

- Risk tier & intended use (origination decision support)

- RACI & roles (Owner, Validator, Approver)

2. Regulatory & Control Mapping

- EU AI Act, ISO 23894, NIST AI RMF

- Banking model risk & validation expectations (e.g., SR 11-7/ECB)

- Policy→Procedure→Workflow links & Evidence IDs

3. Validation Evidence

- Discrimination & calibration (AUC, KS, Brier)

- Fairness, stability & robustness tests

- Backtesting & challenger comparisons

4. Approvals & Sign-offs

- 1st/2nd/3rd line approvals

- Deployment gates & conditions

- Immutable audit trail & versioning

5. Monitoring & Incidents

- Drift/quality thresholds; stability index

- Threshold breach alerts & incident runbooks

- Corrective actions (CAPA) & change control

6. Appendix

- Model cards & explainability packs

- Workflow definitions

- Raw artifacts (PDF/CSV/JSON)

Model Overview & Ownership

- Owner: Head of Credit Risk Analytics

- Risk Tier: High (Credit decisioning)

- KPIs: AUC, KS, Approval Rate, NPL%

- Lineage: Core banking, bureau feeds, income verification

Regulatory & Control Mapping (Excerpt)

| Control ID | Policy → Procedure (Workflow) | Framework Mapping | Status | Evidence |
|---|---|---|---|---|
| POL-01 | Model Risk Classification → Tiering Intake (Model Register) | EU AI Act Art.6; ISO 23894 5.3; Model Risk Principles | Implemented | EVID-101 |
| POL-05 | Fairness & Data Governance → Bias Test Suite (BiasCheck Pre-Prod) | EU AI Act Art.10; NIST Measure; Data Governance | Implemented | EVID-204 |
| POL-07 | Validation & Performance → Independent Validation (ValPack) | Model Risk Validation (e.g., SR 11-7/ECB) | Implemented | EVID-290 |
| POL-09 | Human Oversight & Approvals → Approval Gate (Prod Gate) | EU AI Act Art.14; Governance & Accountability | Implemented | EVID-315 |
| POL-12 | Post-Deployment Monitoring → Monitor & Incidents (Ops Monitor) | EU AI Act Art.72; NIST Manage; Model Risk Monitoring | In Review | EVID-441 |

Note: Full binder includes sub-controls, approver roles, challenger models, and artifact references.

Validation Evidence — Fairness, Performance & Robustness

Timestamp: 2025-07-12 14:20 UTC

Result: PASS, adverse impact ratio ≥ 0.85 (minimum threshold 0.80)

Artifacts: PDF report, JSON metrics

Approvals: 1st Line ✔ · Independent Validation ✔
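The adverse impact ratio in the result above is conventionally the approval rate of a comparison group divided by the approval rate of a reference group. The sketch below shows one way such a check could be computed. The function names, the group labels, and the sample data are illustrative assumptions and are not taken from the BiasCheck workflow itself.

```python
from collections import defaultdict


def adverse_impact_ratios(decisions, reference_group):
    """Return {group: approval_rate / reference_approval_rate}.

    `decisions` is an iterable of (group, approved) pairs. Hypothetical helper.
    """
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, is_approved in decisions:
        total[group] += 1
        approved[group] += int(is_approved)

    rates = {g: approved[g] / total[g] for g in total}
    ref_rate = rates[reference_group]
    if ref_rate == 0:
        raise ValueError("reference group has a zero approval rate")
    return {g: r / ref_rate for g, r in rates.items() if g != reference_group}


def passes_air(ratios, threshold=0.8):
    """PASS only if every comparison group meets the minimum ratio."""
    return all(r >= threshold for r in ratios.values())


# Illustrative data only: group A approves 60 of 100, group B approves 51 of 100.
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 51 + [("B", False)] * 49
)
ratios = adverse_impact_ratios(sample, reference_group="A")
result = "PASS" if passes_air(ratios) else "FAIL"
```

With the sample data, group B's ratio is 0.51 / 0.60, which clears the 0.8 threshold. A production test would also need to handle small groups and report the ratio per protected attribute.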

EVID-204

Independent Validation (Excerpt)

Discrimination: AUC 0.88; KS 0.47 (baseline: AUC 0.84; KS 0.42)

Calibration: Brier 0.116; calibration slope 0.98 (within tolerance)

Backtest: Stable across vintages, within ±3% PD bands

Challenger: XGBoost challenger trails the champion by 1.2% AUC
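For readers who want to see how the discrimination and calibration figures above are defined, here is a minimal pure-Python sketch of AUC, the KS statistic, and the Brier score. It assumes labels of 1 for default and 0 for non-default and scores that are predicted default probabilities. The function names and the four-row sample are illustrative, and a validation pack would normally rely on a tested statistics library rather than hand-written code.

```python
def auc(y_true, scores):
    """Probability that a random positive scores above a random negative.

    Ties count as half a win. O(n^2), fine for a small illustration.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ks_statistic(y_true, scores):
    """Largest gap between the cumulative score distributions of the two classes."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    pairs = sorted(zip(scores, y_true))
    cum_pos = cum_neg = 0
    best = 0.0
    for i, (score, label) in enumerate(pairs):
        if label == 1:
            cum_pos += 1
        else:
            cum_neg += 1
        # Compare the two CDFs only after all ties at this score are counted.
        last_of_tie = i + 1 == len(pairs) or pairs[i + 1][0] != score
        if last_of_tie:
            best = max(best, abs(cum_pos / n_pos - cum_neg / n_neg))
    return best


def brier(y_true, probs):
    """Mean squared error between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, probs)) / len(y_true)


# Illustrative data only.
y = [0, 0, 1, 1]
p = [0.1, 0.4, 0.35, 0.8]
metrics = {"auc": auc(y, p), "ks": ks_statistic(y, p), "brier": brier(y, p)}
```

Higher AUC and KS indicate better rank-ordering of defaulters, while a lower Brier score indicates better-calibrated probabilities.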

Explainability & Sensitivity (Excerpt)

Global: SHAP top features are utilization, DTI, and recent delinquencies

Local: LIME/SHAP explanations available per decision (stored with the decision ID)

Stress: Macro shock (+150 bps) lowers the approval rate by 2.1% and raises the loss rate by 0.4%

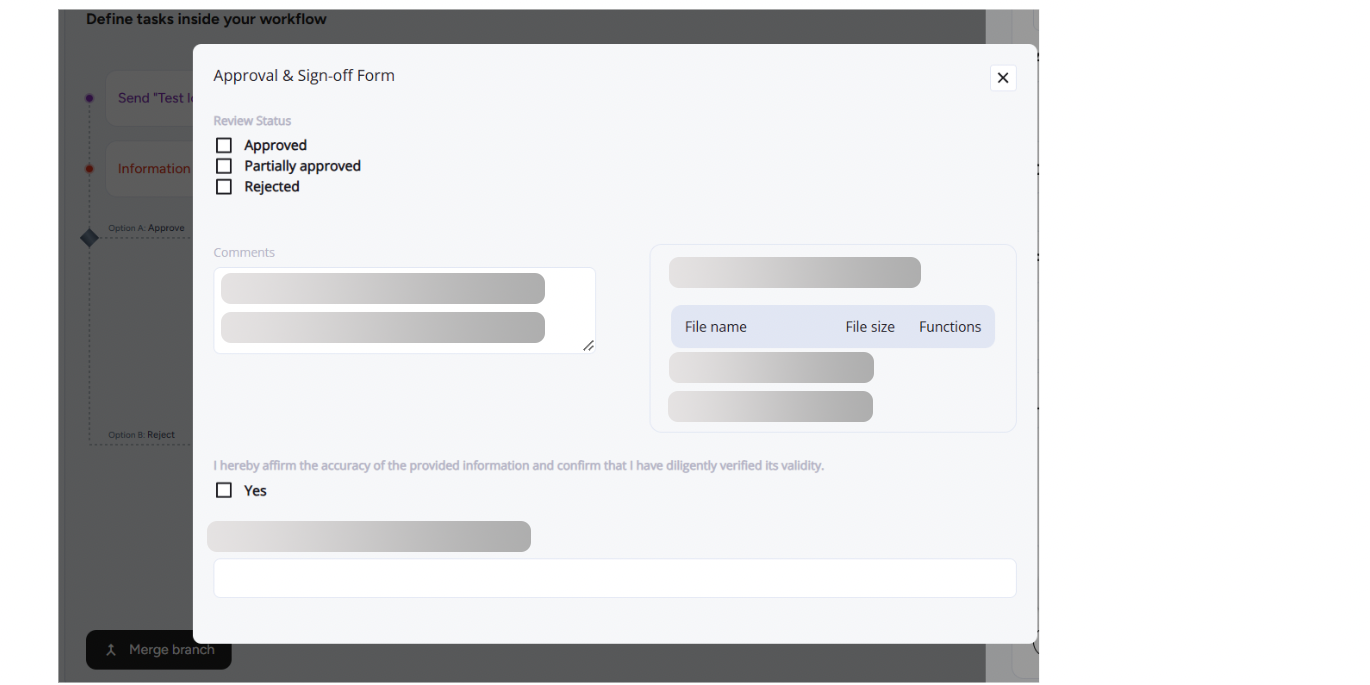

Approvals & Sign-offs

Approvers: Model Owner (1st), Model Risk/Compliance (2nd), Internal Audit (3rd, read-only)

Artifacts: Signed PDF, approval metadata (version, hash)

Audit: Immutable log entry AL-315-C
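One common way to make an approval log tamper-evident is to chain entries by hash, so that each entry commits to the one before it. The sketch below illustrates that idea with Python's standard `hashlib` and `json` modules. It is an assumption about how such a log could work, not a description of RiskAI's implementation, and the record fields other than the IDs quoted above are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def entry_hash(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record that commits to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"record": record, "prev_hash": prev_hash}
    entry = {**body, "hash": entry_hash(body)}
    log.append(entry)
    return entry


def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
        if entry["prev_hash"] != prev_hash or entry["hash"] != entry_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


# Illustrative entry: the version string and PDF bytes are made up.
log = []
append_entry(log, {
    "log_id": "AL-315-C",
    "evidence_id": "EVID-315",
    "model_version": "example-version",
    "artifact_sha256": hashlib.sha256(b"signed approval PDF bytes").hexdigest(),
})
append_entry(log, {"log_id": "example-next-entry", "evidence_id": "EVID-315"})
chain_ok = verify(log)
```

A hash chain only detects tampering. Preventing it also requires controlled write access or an append-only storage backend.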

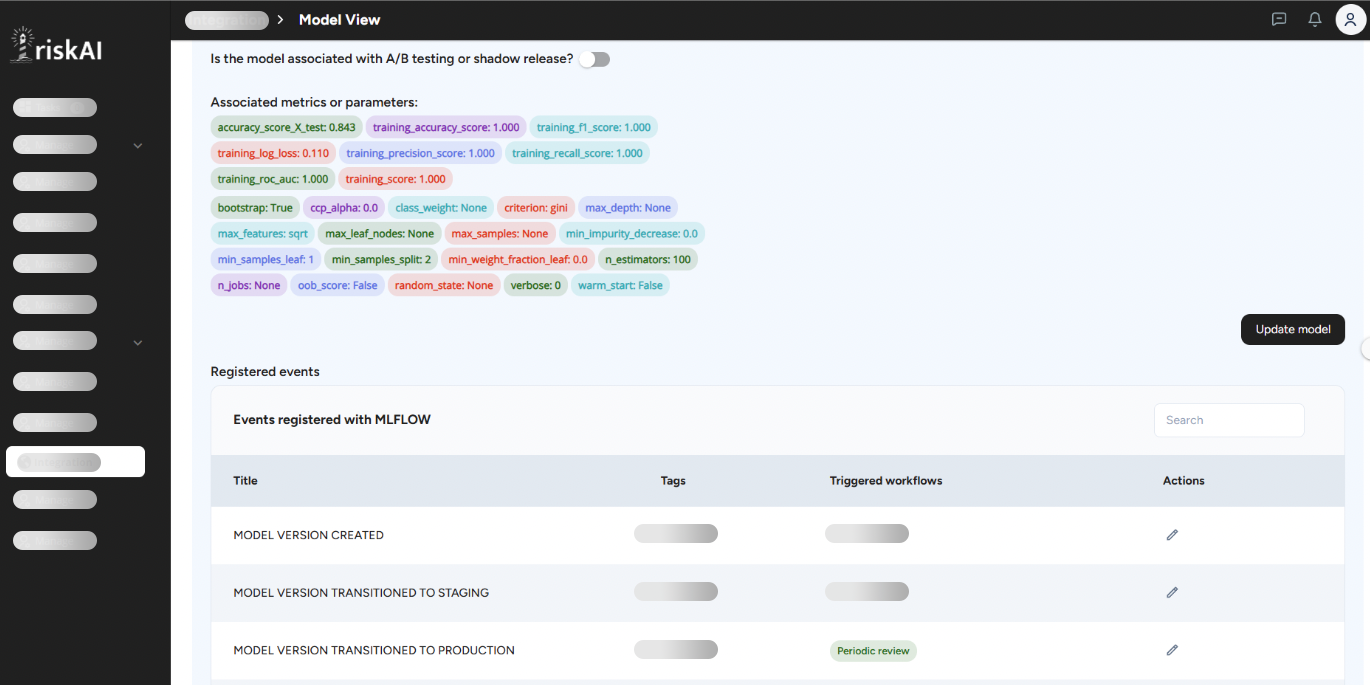

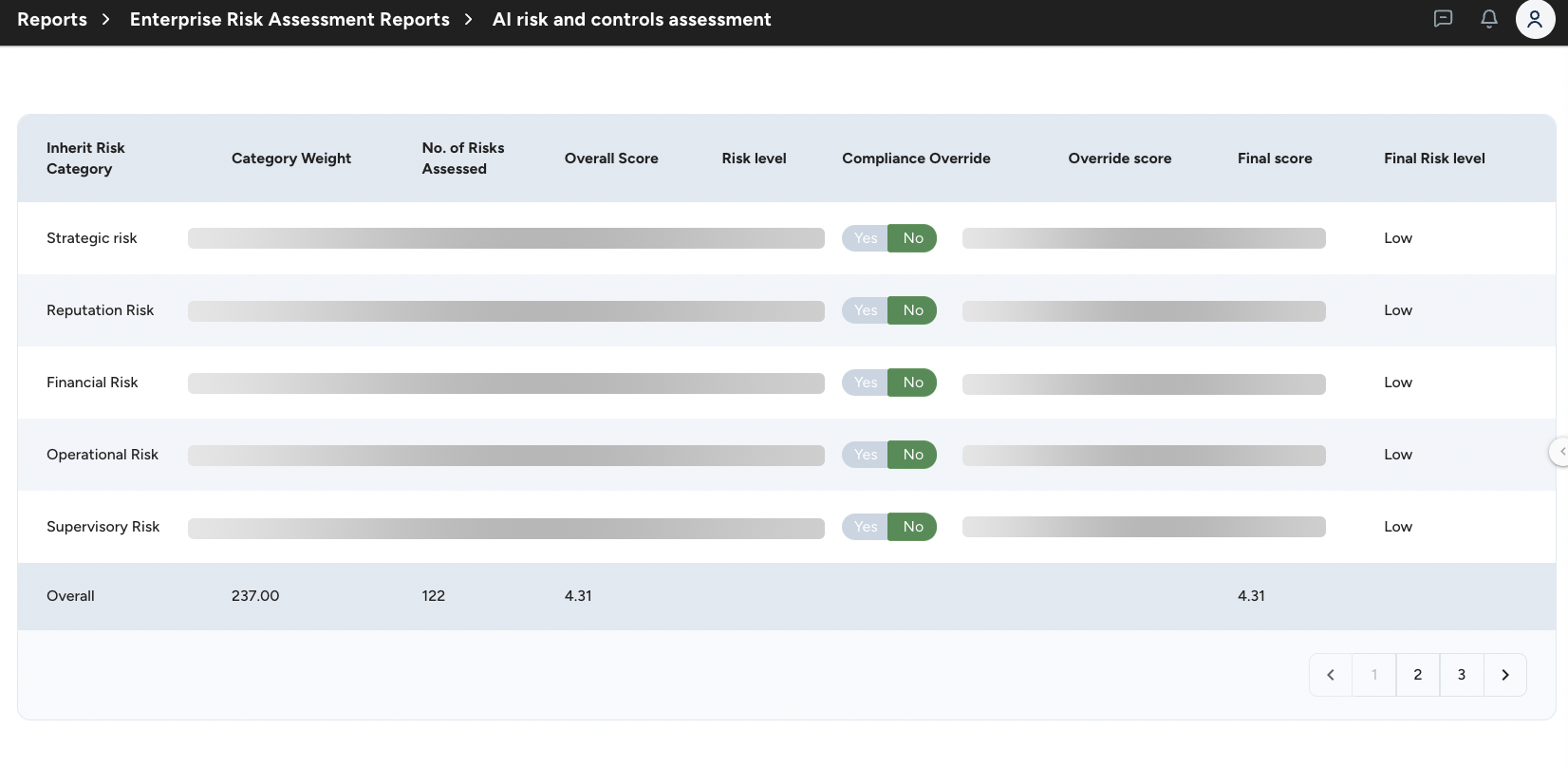

EVID-315

Controls Assessment

Event: Controls assessment strength 4.3 out of 5

Action: No action required; within tolerance

Status: Compliant

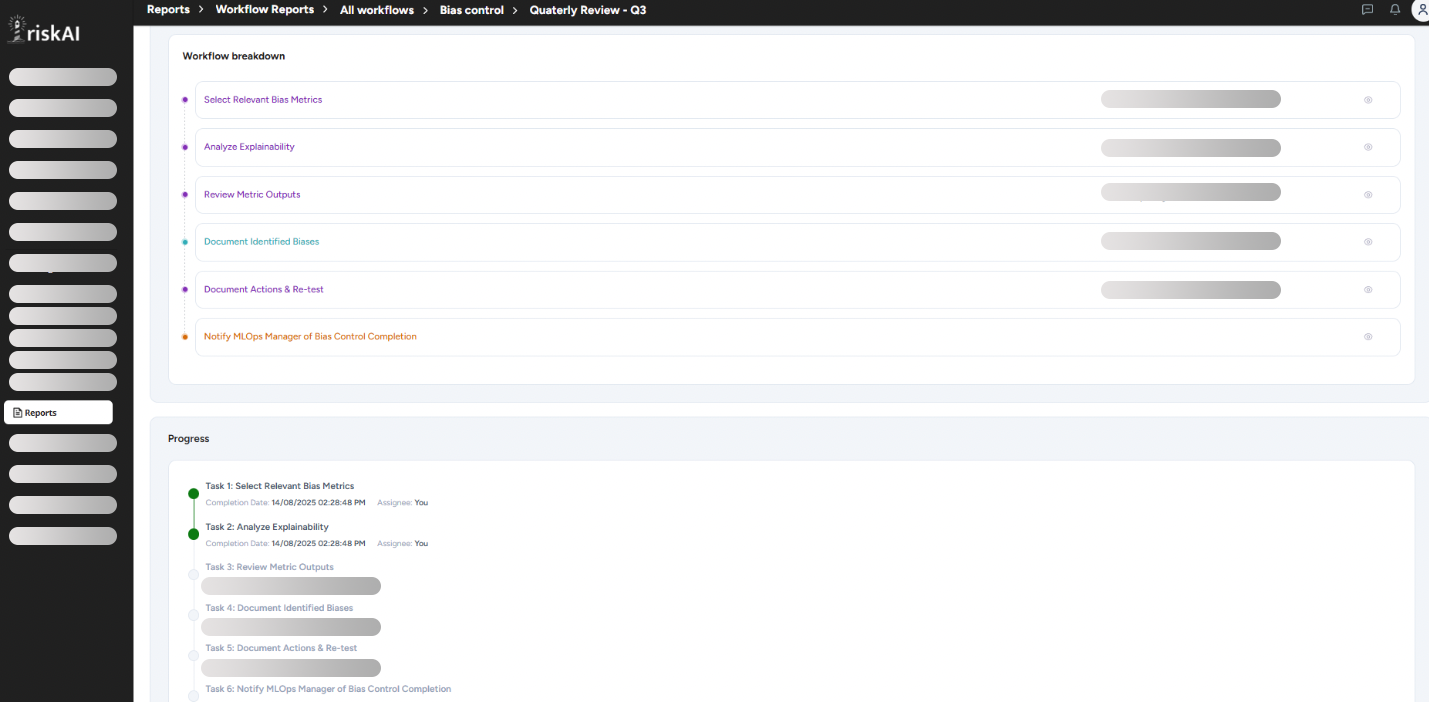

EVID-441

Runbook Excerpt

Trigger: PSI > 0.25 for 2 consecutive weeks

Steps: Notify Model Owner → Freeze threshold changes → RCA → Recalibration/Challenger test

Records: Incident INC-441-12, CAPA CAP-441-21, Change CHG-441-05
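The runbook trigger above can be sketched in a few lines. The Population Stability Index compares the binned score distribution at development time with the current one, and the alert fires only when the threshold is exceeded in consecutive weeks. The function names, the bin counts, and the weekly series below are illustrative assumptions.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index over matching bins.

    PSI = sum((actual% - expected%) * ln(actual% / expected%)).
    `eps` floors empty bins so the logarithm stays defined.
    """
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total


def breach_triggered(weekly_psi, threshold=0.25, consecutive=2):
    """True once PSI exceeds the threshold for `consecutive` weeks in a row."""
    run = 0
    for value in weekly_psi:
        run = run + 1 if value > threshold else 0
        if run >= consecutive:
            return True
    return False


# Illustrative data only: two-bin score distributions and a weekly PSI series.
stable = psi([50, 50], [50, 50])
shifted = psi([50, 50], [90, 10])
single_spike = breach_triggered([0.10, 0.30, 0.20, 0.30])
sustained = breach_triggered([0.10, 0.30, 0.26])
```

Requiring consecutive breaches keeps a single noisy week from opening an incident, at the cost of reacting one week later to a genuine shift.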

Additional Banking Use Case Snapshots

EVID-518 — Transaction Monitoring (AML)

Scenario coverage matrix, SAR workflow linkage, precision/recall on typologies.

Workflow: AML-Ops Monitor

EVID-622 — Card Fraud Model

Latency SLOs, false-positive burden, customer friction metrics, rollback plan.

Workflow: Realtime Guard

Appendix — Workflow Definitions

BiasCheck — Pre-Prod

Inputs: Validation dataset, protected attributes config

Outputs: PDF, JSON metrics, approvals

Gate: Blocks deploy on fail

Independent Validation — ValPack

Scope: Performance, calibration, conceptual soundness

Outputs: Validation report, findings, remediation plan

Independence: Separate validator role enforced

Approval Gate — Prod

Approvers: 1st/2nd line; 3rd as needed

Outputs: Signed PDF, log ID

Gate: Mandatory before deploy
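The two gates described above (BiasCheck blocks deployment on failure, and the production gate requires 1st and 2nd line approval) can be combined into a single pre-deployment check. The sketch below is a hypothetical illustration. The `ReleaseCandidate` type, its fields, and the reason strings are assumptions, not part of the documented workflows.

```python
from dataclasses import dataclass, field

# Lines of defence whose sign-off is mandatory; the 3rd line is "as needed".
REQUIRED_LINES = {"1st", "2nd"}


@dataclass
class ReleaseCandidate:
    model_version: str
    bias_check_passed: bool
    approvals: set = field(default_factory=set)


def gate_decision(candidate):
    """Return (allowed, reasons). Deployment is allowed only with no reasons."""
    reasons = []
    if not candidate.bias_check_passed:
        reasons.append("BiasCheck failed")
    missing = REQUIRED_LINES - candidate.approvals
    if missing:
        reasons.append("missing approvals: " + ", ".join(sorted(missing)))
    return (not reasons, reasons)


# Illustrative candidates only.
ready = gate_decision(ReleaseCandidate("example-version", True, {"1st", "2nd"}))
blocked = gate_decision(ReleaseCandidate("example-version", True, {"1st"}))
```

Returning the reasons alongside the decision gives the audit trail something concrete to record when a deployment is blocked.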

Monitoring — Ops

Signals: Drift (PSI/CSI), data quality, incidents

Outputs: Alerts, incident record, CAPA

SLA: Severity-based escalation
