1) Executive Overview

Use Case: Claims Fraud Detection (Anonymized)

Business Line: P&C Vehicle Insurance

Model Type: Supervised ML (Gradient Boosting)

Purpose: Prioritize suspicious claims for human review to reduce leakage while minimizing false positives.

This sample shows how RiskAI maps a use case to controls derived from the EU AI Act, ISO 23894, the NIST AI RMF, and EIOPA guidance, and auto-generates the supporting evidence (model cards, test results, approvals) for each control.

2) Policy → Procedure → Workflow

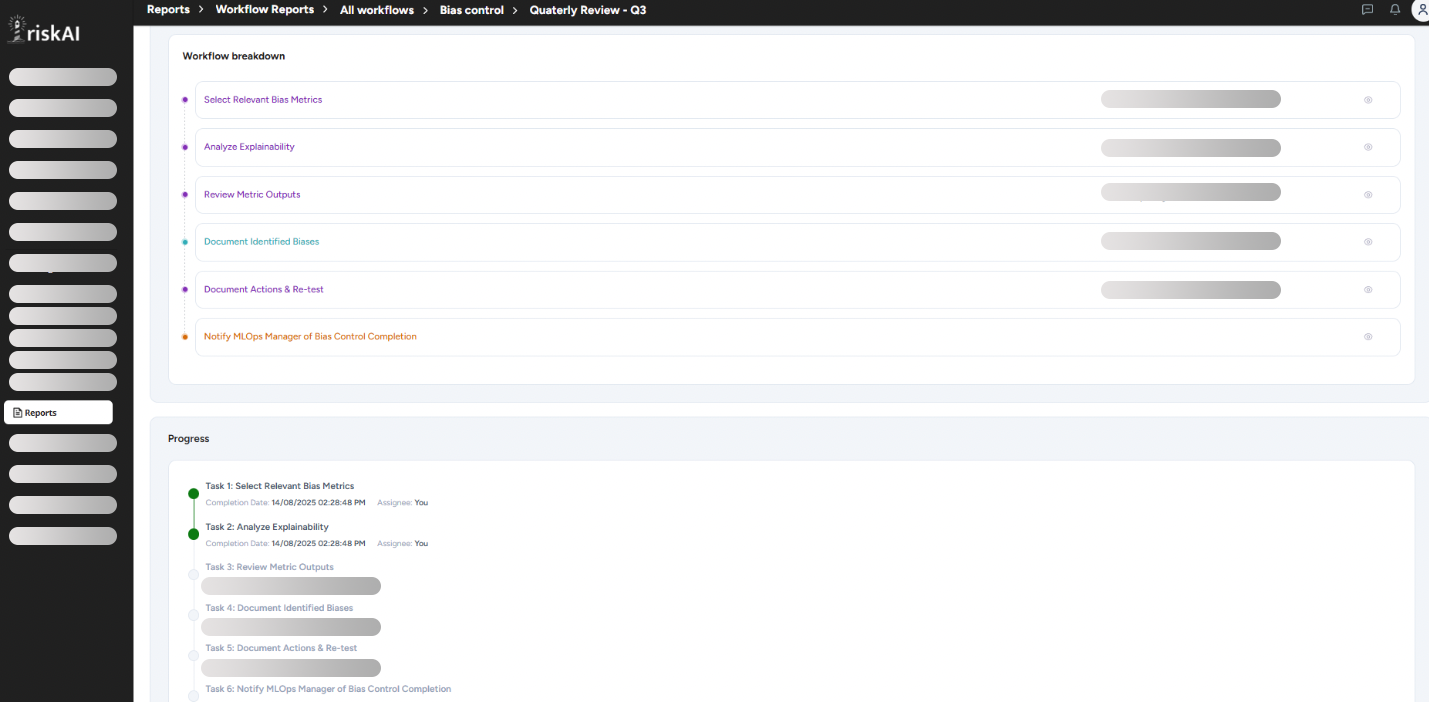

Bias & Fairness Obligations

All high-risk AI models must pass bias testing before deployment; results must be reviewed and approved by the 2nd line (Risk).

POL-05

Run Bias Test Suite

Execute pre-deployment bias tests using BiasCheck module; attach results to the evidence pack.

PROC-05-A

BiasCheck – Pre‑Prod

- Triggered: Prior to approval gate

- Owner: Model Owner; Reviewer: Risk

- Outputs: PDF report, JSON metrics, sign‑off
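A pre-deployment bias gate of this kind can be sketched as below. This is a minimal illustration, not the BiasCheck module itself: it assumes "group disparity" means the largest gap in flag rates between groups, and the names `flag_rates`, `bias_check`, and the record fields `group` and `flagged` are hypothetical. The 5% threshold is the one quoted in the EVID-204 snapshot.

```python
import json
from collections import defaultdict

DISPARITY_THRESHOLD = 0.05  # 5% threshold quoted in the EVID-204 snapshot


def flag_rates(records, group_key="group", flag_key="flagged"):
    """Return the share of flagged claims per group (hypothetical field names)."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for rec in records:
        totals[rec[group_key]] += 1
        flagged[rec[group_key]] += 1 if rec[flag_key] else 0
    return {g: flagged[g] / totals[g] for g in totals}


def bias_check(records, threshold=DISPARITY_THRESHOLD):
    """Compare the largest flag-rate gap between groups to the threshold.

    Assumption: disparity = max rate - min rate. The source does not
    define the metric, so treat this as one possible choice.
    """
    rates = flag_rates(records)
    disparity = max(rates.values()) - min(rates.values())
    return {
        "flag_rates": rates,
        "group_disparity": round(disparity, 4),
        "threshold": threshold,
        "result": "PASS" if disparity < threshold else "FAIL",
    }


if __name__ == "__main__":
    # Synthetic records: 10% of group A and 12% of group B are flagged.
    sample = (
        [{"group": "A", "flagged": i < 10} for i in range(100)]
        + [{"group": "B", "flagged": i < 12} for i in range(100)]
    )
    # The JSON output stands in for the "JSON metrics" artifact.
    print(json.dumps(bias_check(sample), indent=2))
```

The returned dictionary is the kind of record that could be attached to the evidence pack alongside the PDF report and sign-off.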

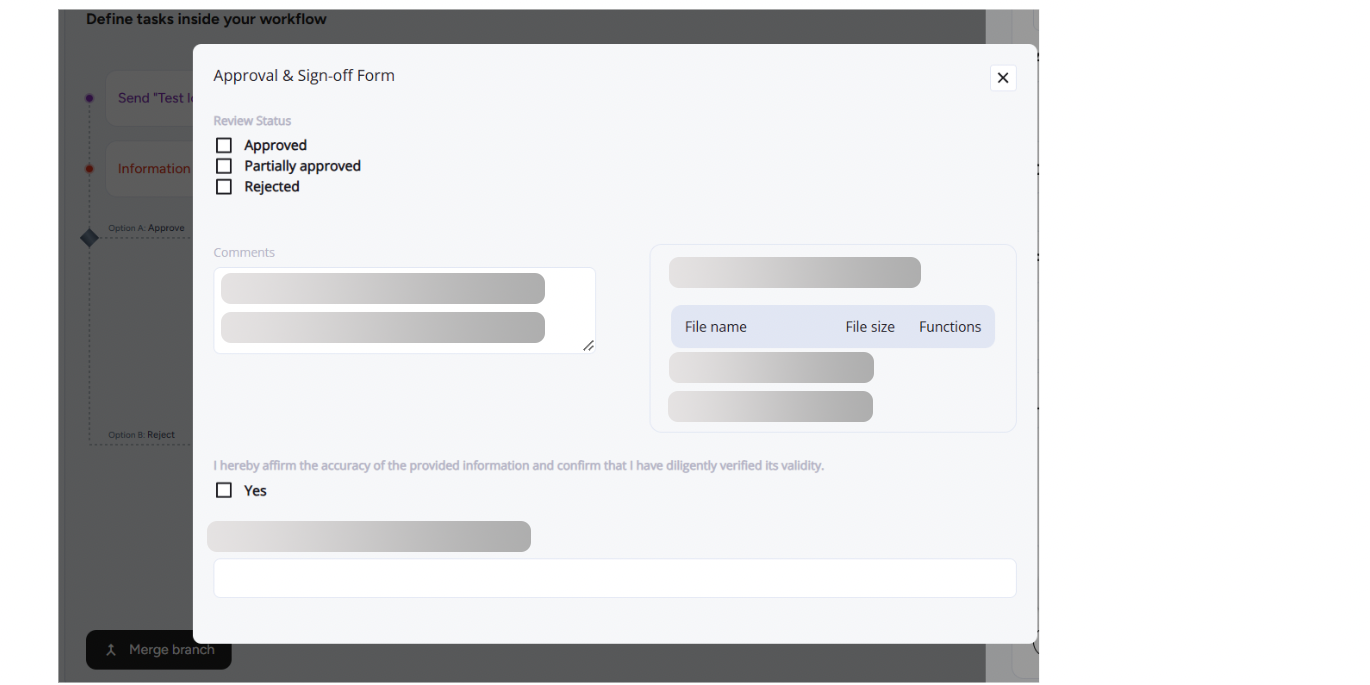

Human Oversight & Approvals

High‑value claims decisions require human approval with traceable sign‑offs across the Three Lines of Defense.

POL-09

Approval Gate

Route decision for 1st/2nd line sign‑off; block deploy on missing approvals.

PROC-09-C

Approval Gate – Prod

- Triggered: Pre‑production & changes

- Artifacts: e‑signatures, timestamps, audit log
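The "block deploy on missing approvals" rule can be sketched as a simple check over collected sign-offs. This is an illustrative sketch only: the `SignOff` record, the role names `model_owner` and `risk`, and the function names are assumptions, not part of RiskAI's actual interface.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Assumed role identifiers for the 1st line (Model Owner) and 2nd line (Risk).
REQUIRED_ROLES = ("model_owner", "risk")


@dataclass(frozen=True)
class SignOff:
    """One e-signature with its timestamp (hypothetical record shape)."""
    role: str
    user: str
    signed_at: datetime


def missing_approvals(sign_offs, required=REQUIRED_ROLES):
    """List the required roles that have not signed yet, in required order."""
    signed = {s.role for s in sign_offs}
    return [role for role in required if role not in signed]


def approval_gate(sign_offs, required=REQUIRED_ROLES):
    """Allow deployment only when every required role has signed off."""
    missing = missing_approvals(sign_offs, required)
    return {"deploy_allowed": not missing, "missing": missing}


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    owner = SignOff("model_owner", "model.owner@insurer.example", now)
    # Only the 1st line has signed, so the gate blocks the deploy.
    print(approval_gate([owner]))
```

Passing a different `required` tuple (for example, one that also includes an audit role) would cover the cases where a 3rd-line sign-off is needed.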

3) Controls & Evidence Table

| Control ID | Policy | Procedure | Workflow | Regulatory Ref | Status | Evidence |
|---|---|---|---|---|---|---|
| POL-01 | Model Risk Classification | Model Tiering | Tiering – Intake | EU AI Act Art. 6; ISO 23894 5.3 | Implemented | EVID-101 |
| POL-05 | Bias & Fairness Obligations | Run Bias Test Suite | BiasCheck – Pre‑Prod | EU AI Act Art. 10; ISO 23894 6.2; NIST Measure | Implemented | EVID-204 |
| POL-09 | Human Oversight & Approvals | Approval Gate | Approval Gate – Prod | EU AI Act Art. 14; EIOPA oversight | Implemented | EVID-315 |
| POL-12 | Post‑Market Monitoring | Monitor Drift & Incidents | Monitoring – Ops | EU AI Act Art. 72; NIST Manage | In Review | EVID-441 |

4) Evidence Snapshots

EVID-101 — Model Register / Tiering (Intake)

Timestamp: 2025‑07‑08 10:42 UTC

User: model.owner@insurer.example

Result: Tier = High Risk (Insurance)

Artifacts: JSON intake summary, Owner sign‑off

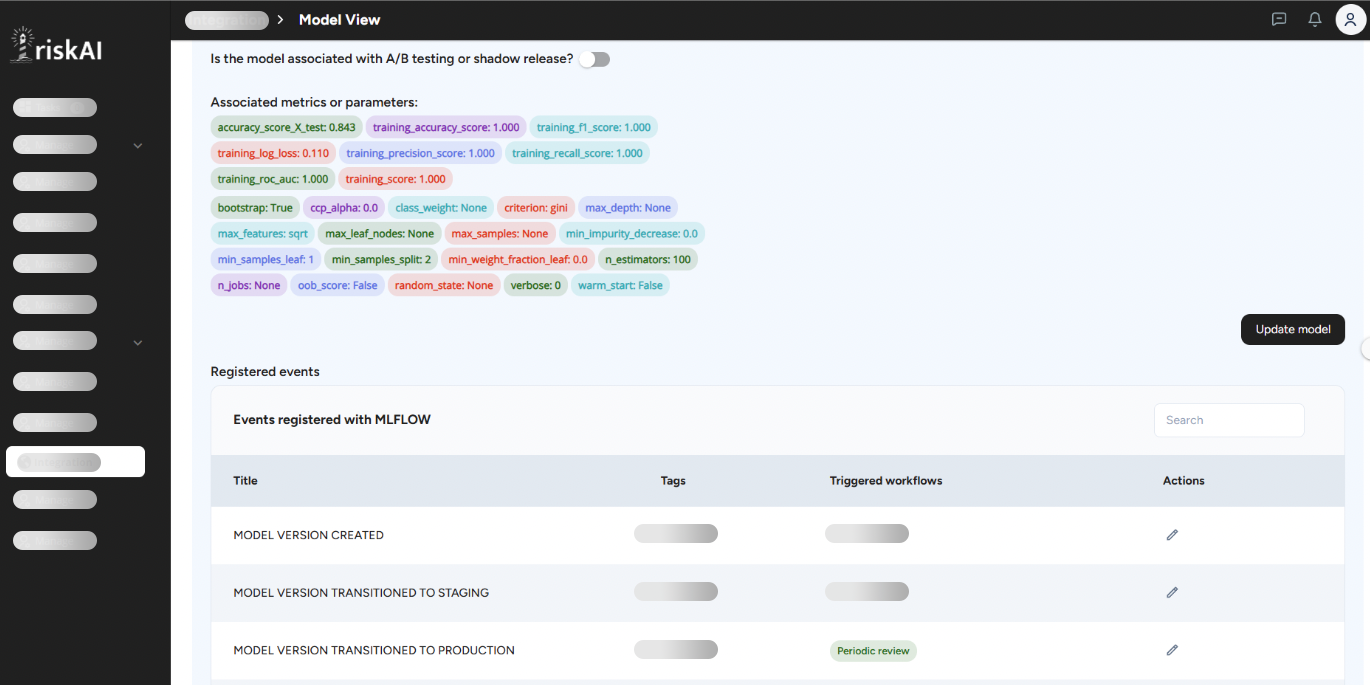

EVID-204 — BiasCheck (Pre‑Production)

Timestamp: 2025‑07‑12 14:20 UTC

User: data.scientist@insurer.example

Result: PASS — group disparity < 3% (threshold 5%)

Approvals: 1st Line ✔, 2nd Line ✔

EVID-315 — Approval Gate (Production)

Timestamp: 2025‑07‑15 09:05 UTC

Participants: Model Owner ✔, Risk ✔

Artifacts: Signed PDF, immutable audit log
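The snapshot does not say how the audit log is made immutable. One common way to make a log tamper-evident is hash chaining, sketched below under that assumption; the entry layout and the helper names `append_entry` and `verify` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash, payload):
    """SHA-256 over the previous hash plus a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify(log):
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"evidence": "EVID-315", "signer": "Model Owner"})
    append_entry(log, {"evidence": "EVID-315", "signer": "Risk"})
    print(verify(log))
```

A chain like this only detects tampering; preventing it still depends on where the log and its latest hash are stored.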

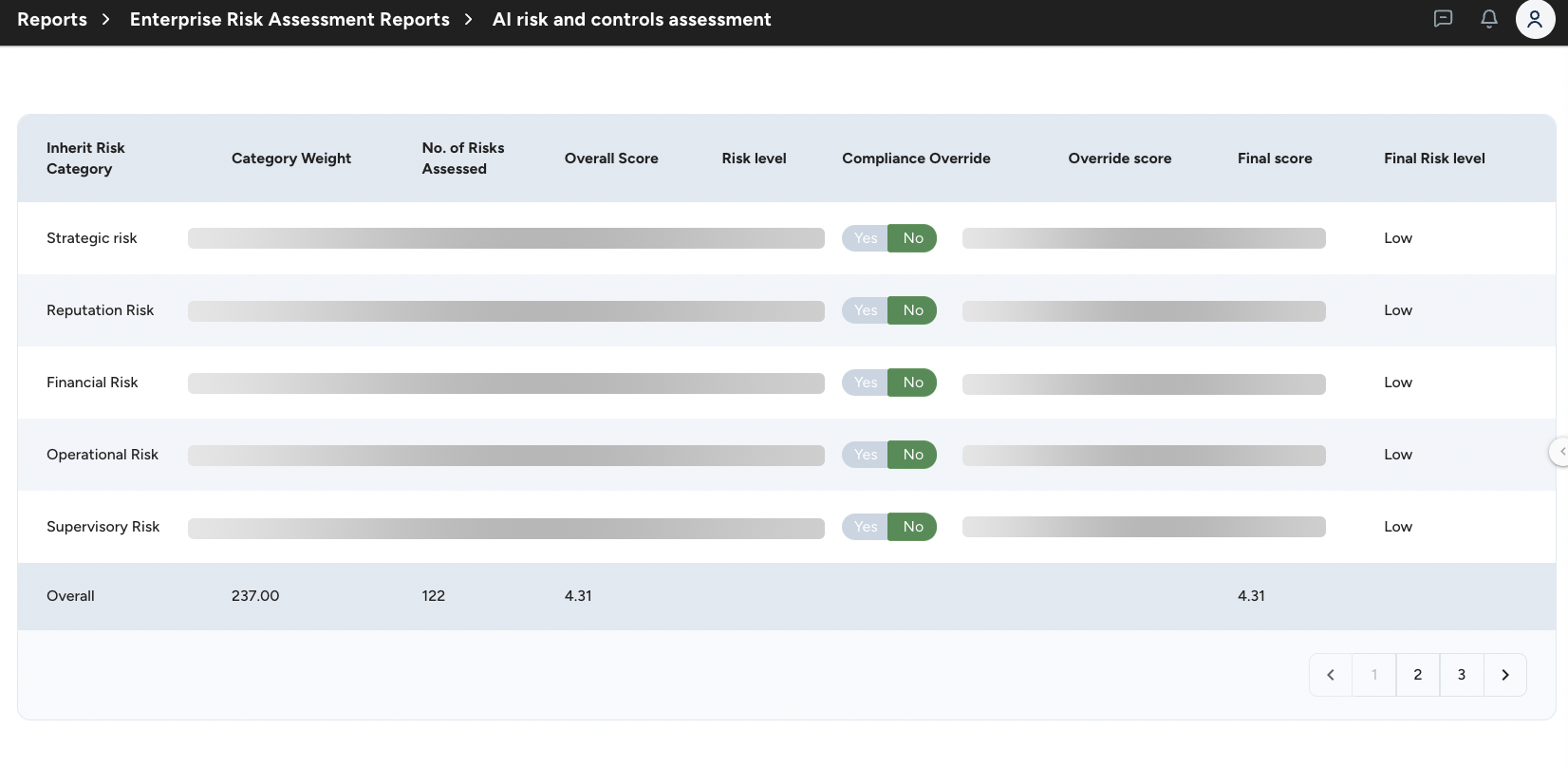

EVID-441 — Monitoring (Ops)

Timestamp: 2025‑07‑30 18:11 UTC

Event: Data drift alert (covariate shift)

Action: Escalated per runbook; retrain request filed
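A covariate-shift alert like the one above could be raised by comparing a feature's current distribution with its training baseline. The sketch below uses the Population Stability Index (PSI) as one possible drift score; the source does not name the metric RiskAI uses, and the 0.2 cut-off, the equal-width binning, and the function names are assumptions.

```python
import math

PSI_ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cut-off, not from the source


def bin_shares(values, lo, width, bins, eps):
    """Share of values per equal-width bin, floored at eps to avoid log(0)."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        # Values outside the baseline range fall into the first or last bin.
        counts[min(max(idx, 0), bins - 1)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` against `baseline`."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline
    base = bin_shares(baseline, lo, width, bins, eps)
    curr = bin_shares(current, lo, width, bins, eps)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


def drift_alert(feature, baseline, current, threshold=PSI_ALERT_THRESHOLD):
    """Build an alert record for one feature (hypothetical record shape)."""
    score = psi(baseline, current)
    return {"feature": feature, "psi": round(score, 4), "alert": score > threshold}


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # synthetic training data
    shifted = [0.5 + i / 200 for i in range(100)]  # synthetic shifted data
    print(drift_alert("claim_amount", baseline, shifted))
```

An alert record with `alert` set to true is the point at which the runbook escalation and retrain request described above would start.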

5) Regulatory Mapping

Each control is mapped to obligations across frameworks. Below is an excerpt.

EU AI Act

- Art. 9 Risk Management: POL-01, POL-12
- Art. 10 Data & Data Governance: POL-05
- Art. 14 Human Oversight: POL-09
- Art. 72 Post‑Market Monitoring: POL-12

ISO 23894

- 5.3 Risk assessment: POL-01
- 6.2 Risk treatment & controls: POL-05, POL-09

NIST AI RMF

- Govern: policies & roles
- Measure: bias/performance testing (POL‑05)
- Manage: monitoring & incidents (POL‑12)

EIOPA (AI in insurance)

- Trust, fairness, human oversight, documentation & accountability
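A mapping like this is easy to hold as data and invert, so that each control lists the obligations it supports. The sketch below encodes part of the excerpt above; the dictionary layout and the function name `controls_to_obligations` are illustrative assumptions, not RiskAI's data model.

```python
from collections import defaultdict

# Part of the excerpt above, keyed by (framework, obligation).
OBLIGATION_TO_CONTROLS = {
    ("EU AI Act", "Art. 9 Risk Management"): ["POL-01", "POL-12"],
    ("EU AI Act", "Art. 10 Data & Data Governance"): ["POL-05"],
    ("EU AI Act", "Art. 14 Human Oversight"): ["POL-09"],
    ("ISO 23894", "5.3 Risk assessment"): ["POL-01"],
    ("ISO 23894", "6.2 Risk treatment & controls"): ["POL-05", "POL-09"],
    ("NIST AI RMF", "Measure"): ["POL-05"],
    ("NIST AI RMF", "Manage"): ["POL-12"],
}


def controls_to_obligations(mapping):
    """Invert the mapping: control ID -> list of 'framework: obligation'."""
    inverted = defaultdict(list)
    for (framework, obligation), controls in mapping.items():
        for control in controls:
            inverted[control].append(f"{framework}: {obligation}")
    return dict(inverted)


if __name__ == "__main__":
    by_control = controls_to_obligations(OBLIGATION_TO_CONTROLS)
    for control in sorted(by_control):
        print(control, "->", "; ".join(by_control[control]))
```

The inverted view answers the auditor's question "which obligations does POL-09 evidence?" without scanning every framework.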

Appendix: Workflow Definitions

BiasCheck – Pre‑Prod

Trigger: Prior to promotion to Pre‑Prod

Inputs: Candidate model, validation dataset, protected attributes config

Steps: Run tests → generate PDF/JSON → attach to evidence → request approvals

Outputs: Report (PDF), metrics (JSON), approvals (sign‑off), audit log ID

Approval Gate – Prod

Trigger: Before production deploy or material change

Approvers: Model Owner (1st), Risk (2nd), Audit (3rd) as needed

Outputs: Signed PDF, approval metadata, version tag

Monitoring – Ops

Trigger: Scheduled jobs & realtime alerts

Signals: Drift, bias, data quality, latency, incident flags

Outputs: Alerts, incident record, corrective action plan, audit log ID