1) Executive Overview

Use Case: Credit Risk Scoring (Retail – Anonymized)

Business Line: Unsecured Lending

Model Type: Gradient Boosted Trees

Purpose: Improve approval precision and loss outcomes while maintaining fairness and explainability.

This sample shows how RiskAI maps banking use cases to the EU AI Act, ISO 23894, the NIST AI RMF, and banking model-risk expectations. It also shows how RiskAI auto-generates model cards, validation packs, approval records, and immutable audit evidence.

2) Policy → Procedure → Workflow

Model Risk Classification

All AI models must be tiered by impact and use (e.g., credit decisioning = High) at intake.

POL-01 Model Tiering

Complete intake questionnaire, compute tier score, assign owner & validator.

PROC-01-A Tiering — Intake

- Trigger: Project creation

- Outputs: JSON intake summary, owner sign-off

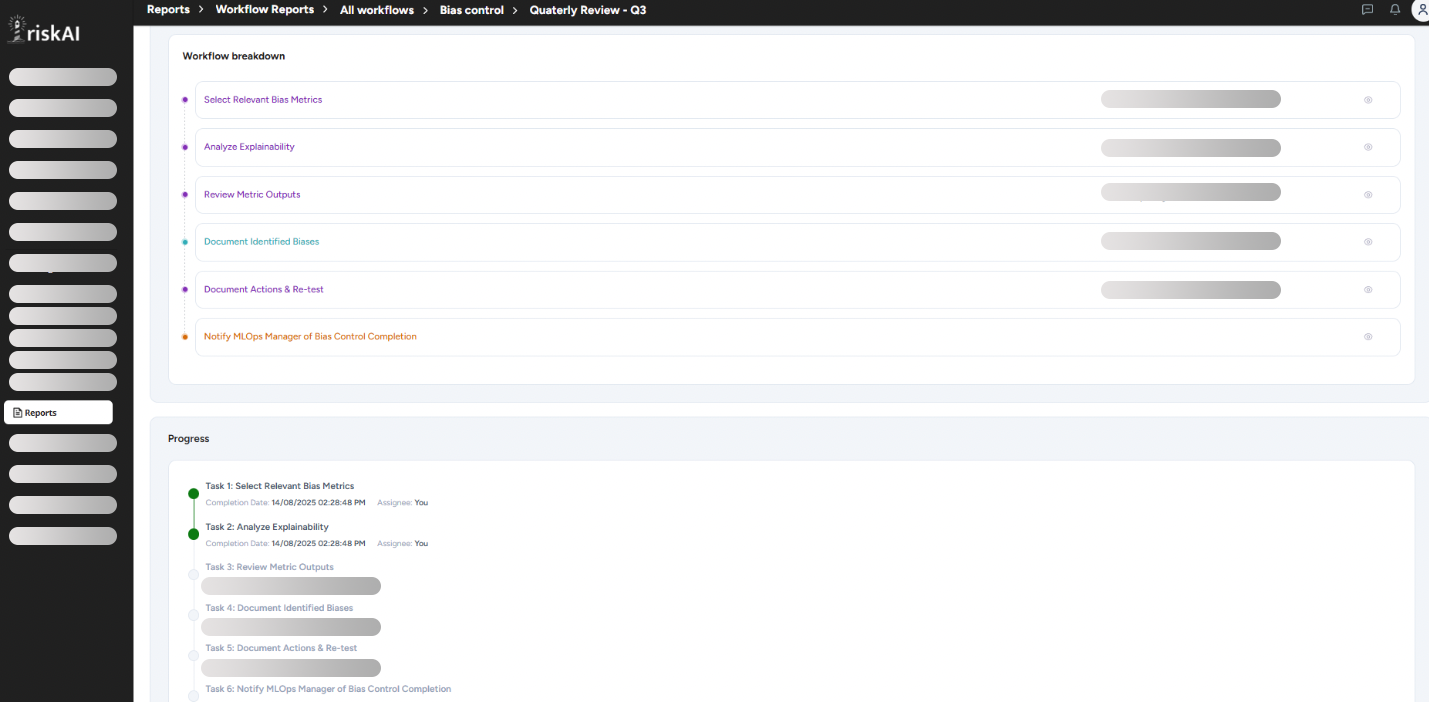
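The intake step above can be sketched as a weighted questionnaire that produces a tier score and a JSON intake summary. This is a minimal illustration, not RiskAI's scoring method. The questionnaire keys, weights, tier cut-offs, model ID, and validator address are all hypothetical. They were chosen only so that a credit-decisioning model lands in the High tier, as POL-01 states.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical questionnaire weights (not RiskAI's actual scheme).
QUESTION_WEIGHTS = {
    "affects_credit_decision": 5,
    "uses_personal_data": 2,
    "fully_automated": 2,
    "customer_facing": 1,
}

# Hypothetical tier cut-offs, checked from the highest score down.
TIER_CUTOFFS = [(7, "High"), (3, "Medium"), (0, "Low")]


@dataclass
class IntakeSummary:
    model_id: str
    tier_score: int
    tier: str
    owner: str
    validator: str


def compute_tier(answers: dict) -> tuple:
    """Sum the weights of all 'yes' answers and map the score to a tier."""
    score = sum(w for q, w in QUESTION_WEIGHTS.items() if answers.get(q, False))
    for cutoff, tier in TIER_CUTOFFS:
        if score >= cutoff:
            return score, tier
    return score, "Low"  # only reachable if every cut-off is raised above 0


def intake(model_id: str, answers: dict, owner: str, validator: str) -> str:
    """Tier the model, assign owner and validator, return a JSON intake summary."""
    if owner == validator:
        raise ValueError("owner and validator must be different people")
    score, tier = compute_tier(answers)
    summary = IntakeSummary(model_id, score, tier, owner, validator)
    return json.dumps(asdict(summary), indent=2)


if __name__ == "__main__":
    # Hypothetical model ID and validator address.
    answers = {"affects_credit_decision": True, "uses_personal_data": True}
    print(intake("credit-gbt-001", answers, "model.owner@bank.example",
                 "validator@bank.example"))
```

Rejecting an owner who is also the validator at intake is one simple way to assign both roles up front without compromising independence later.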

Fairness & Data Governance

High-risk models must pass fairness, data-quality, and stability tests before deployment.

POL-05 Run Bias & Stability Suite

Execute BiasCheck, stability and data-quality tests; archive outputs.

PROC-05-A BiasCheck — Pre-Prod

- Trigger: Before validation gate

- Outputs: PDF report, JSON metrics, approvals

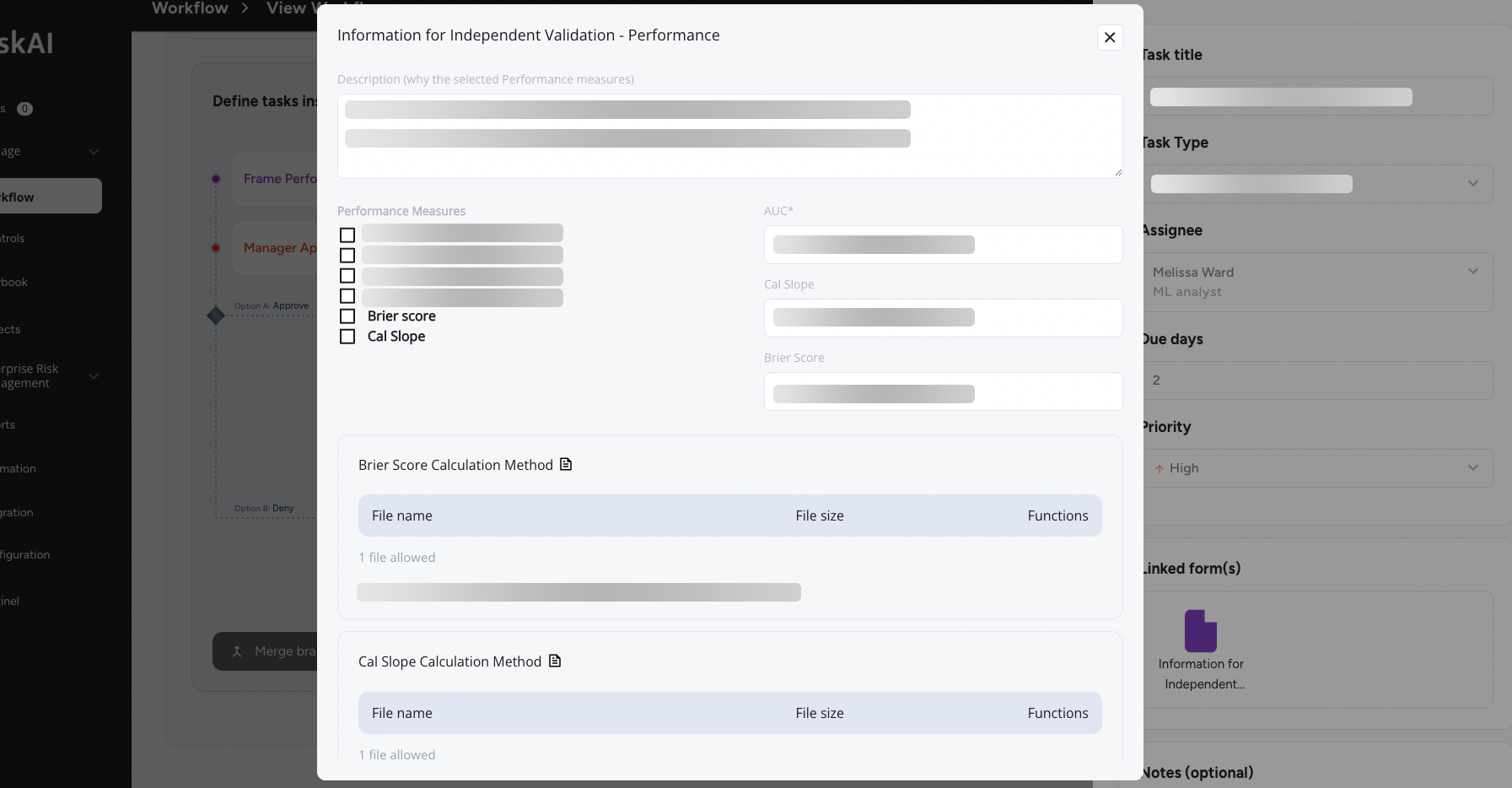
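The fairness part of the suite can be illustrated with an adverse impact ratio (AIR) check. This sketch assumes AIR is computed in the usual fair-lending way: each group's approval rate divided by a reference group's approval rate. It uses the 0.85 threshold reported in EVID-204. The function names and the reference-group argument are hypothetical, and the stability and data-quality tests are not shown.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Approval rate per group; decisions are 1 (approve) or 0 (decline)."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        approved[group] += decision
        total[group] += 1
    return {g: approved[g] / total[g] for g in total}


def adverse_impact_ratios(decisions, groups, reference):
    """AIR of every non-reference group relative to the reference group."""
    rates = approval_rates(decisions, groups)
    ref_rate = rates[reference]
    if ref_rate == 0:
        raise ValueError("reference group has no approvals; AIR is undefined")
    return {g: r / ref_rate for g, r in rates.items() if g != reference}


def bias_check(decisions, groups, reference, threshold=0.85):
    """PASS only if every group's AIR meets the threshold."""
    airs = adverse_impact_ratios(decisions, groups, reference)
    passed = all(air >= threshold for air in airs.values())
    return {"air": airs, "threshold": threshold,
            "result": "PASS" if passed else "FAIL"}


if __name__ == "__main__":
    decisions = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
    groups = ["ref"] * 5 + ["grp_b"] * 5
    print(bias_check(decisions, groups, reference="ref"))
```

The returned dictionary is the kind of JSON-serializable metrics record the procedure lists among its outputs.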

Independent Validation

All high-risk credit models require independent validation prior to go-live.

POL-07 Validation Package

Complete the performance, calibration, and conceptual-soundness reviews.

PROC-07-B ValPack — Independent

- Trigger: After BiasCheck

- Outputs: PDF validation, findings, remediation plan

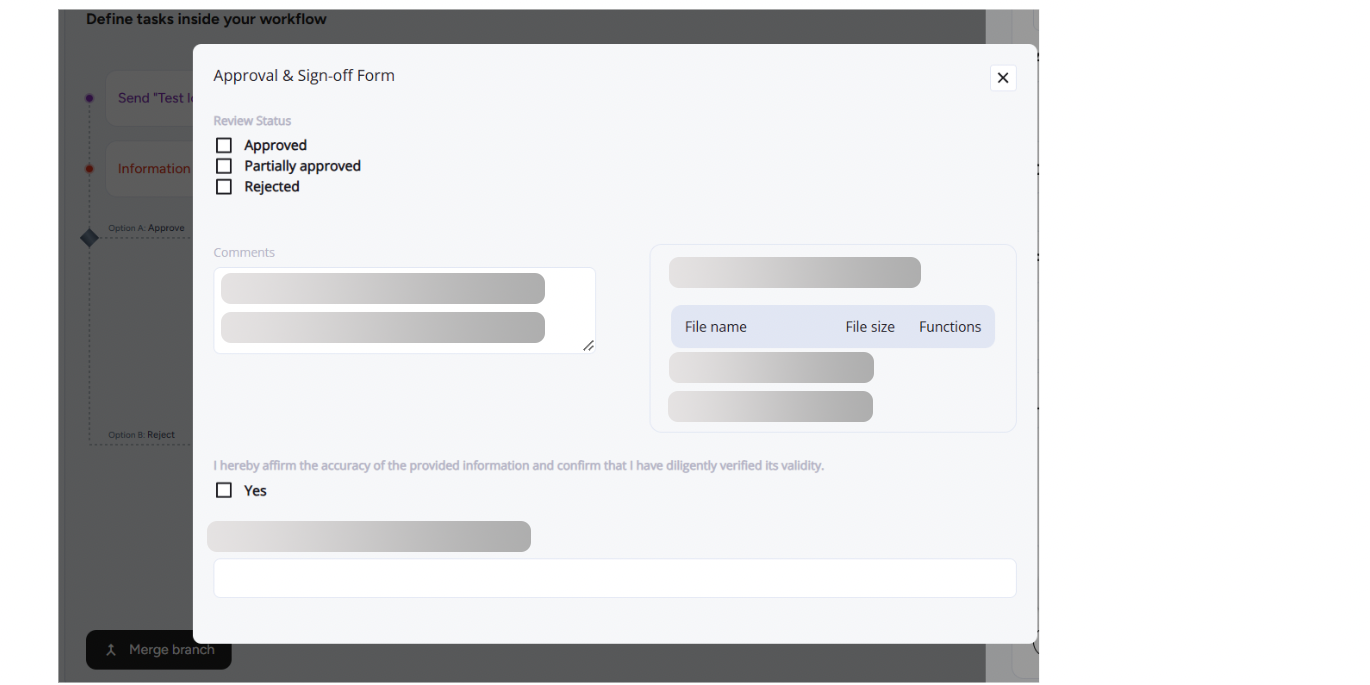
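Three of the headline validation metrics reported in EVID-290 (AUC, KS, and Brier score) can be sketched in plain Python. The sketch assumes binary outcomes (1 = default) and predicted default probabilities. The function names are hypothetical. Calibration slope, which requires regressing outcomes on the log-odds of the predictions, is omitted. So is the challenger comparison.

```python
from bisect import bisect_right


def _split(y, p):
    """Separate scores of defaults (y == 1) from non-defaults (y == 0)."""
    pos = [s for s, t in zip(p, y) if t == 1]
    neg = [s for s, t in zip(p, y) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one default and one non-default")
    return pos, neg


def auc(y, p):
    """AUC as the probability a random default outscores a random non-default."""
    pos, neg = _split(y, p)
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count half
    return wins / (len(pos) * len(neg))


def ks_statistic(y, p):
    """Maximum gap between the score CDFs of defaults and non-defaults."""
    pos, neg = _split(y, p)
    pos.sort()
    neg.sort()
    ks = 0.0
    for t in sorted(set(p)):
        cdf_pos = bisect_right(pos, t) / len(pos)
        cdf_neg = bisect_right(neg, t) / len(neg)
        ks = max(ks, abs(cdf_pos - cdf_neg))
    return ks


def brier(y, p):
    """Mean squared error between predicted PD and the 0/1 outcome."""
    return sum((pi - yi) ** 2 for yi, pi in zip(y, p)) / len(y)
```

Take four loans with outcomes `[0, 0, 1, 1]` and PDs `[0.1, 0.4, 0.35, 0.8]`. Here `auc` returns 0.75 and `ks_statistic` returns 0.5. The pairwise AUC loop is quadratic, which is fine for illustration. On real portfolios, library routines such as scikit-learn's `roc_auc_score` and `brier_score_loss` are the more practical choice.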

Human Oversight & Approvals

Production deployment requires gated approvals from both the first and second lines of defense.

POL-09 Approval Gate

Route to approvers; block deployment if any sign-off is missing.

PROC-09-C Approval Gate — Prod

- Trigger: Pre-production & material change

- Artifacts: e-signatures, timestamps, audit log
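The "block deployment if any sign-off is missing" rule can be sketched as a small gate object. This is a minimal illustration. It assumes the two required roles listed in the appendix: the Model Owner (first line) and Model Risk/Compliance (second line). The role keys, class names, and exception type are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical role keys for the two required lines of defense.
REQUIRED_SIGNOFFS = frozenset({"model_owner", "model_risk"})


class DeploymentBlocked(Exception):
    """Raised when a deployment is attempted without every required sign-off."""


@dataclass
class ApprovalRequest:
    model_version: str
    signoffs: dict = field(default_factory=dict)  # role -> approver ID

    def sign(self, role: str, approver: str) -> None:
        if role not in REQUIRED_SIGNOFFS:
            raise ValueError(f"unknown approval role: {role}")
        self.signoffs[role] = approver

    def missing(self) -> list:
        # difference() accepts any iterable; iterating a dict yields its keys.
        return sorted(REQUIRED_SIGNOFFS.difference(self.signoffs))


def approval_gate(request: ApprovalRequest) -> None:
    """Block deployment unless every required role has signed off."""
    missing = request.missing()
    if missing:
        raise DeploymentBlocked(
            f"{request.model_version}: missing sign-off(s): {', '.join(missing)}"
        )
```

Under this design, a material change would open a fresh `ApprovalRequest` for the new model version rather than reuse earlier sign-offs.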

3) Controls & Evidence Table

| Control ID | Policy | Procedure | Workflow | Regulatory Ref | Status | Evidence |
|---|---|---|---|---|---|---|
| POL-01 | Model Risk Classification | Model Tiering | Tiering — Intake | EU AI Act Art. 6; ISO 23894 5.3 | Implemented | EVID-101 |
| POL-05 | Fairness & Data Governance | Bias & Stability Test Suite | BiasCheck — Pre-Prod | EU AI Act Art. 10; NIST AI RMF Measure | Implemented | EVID-204 |
| POL-07 | Independent Validation | Validation Package | ValPack — Independent | Model Risk (e.g., SR 11-7 / ECB) | Implemented | EVID-290 |
| POL-09 | Human Oversight & Approvals | Approval Gate | Approval Gate — Prod | EU AI Act Art. 14; Governance | Implemented | EVID-315 |
| POL-12 | Post-Market Monitoring | Monitor Drift & Incidents | Ops Monitoring | EU AI Act Art. 72; NIST AI RMF Manage | In Review | EVID-441 |

4) Evidence Snapshots

EVID-101 — Model Register / Tiering (Intake)

Timestamp: 2025-07-08 10:42 UTC

User: model.owner@bank.example

Result: Tier = High (Credit decisioning)

Artifacts: JSON intake summary, Owner sign-off

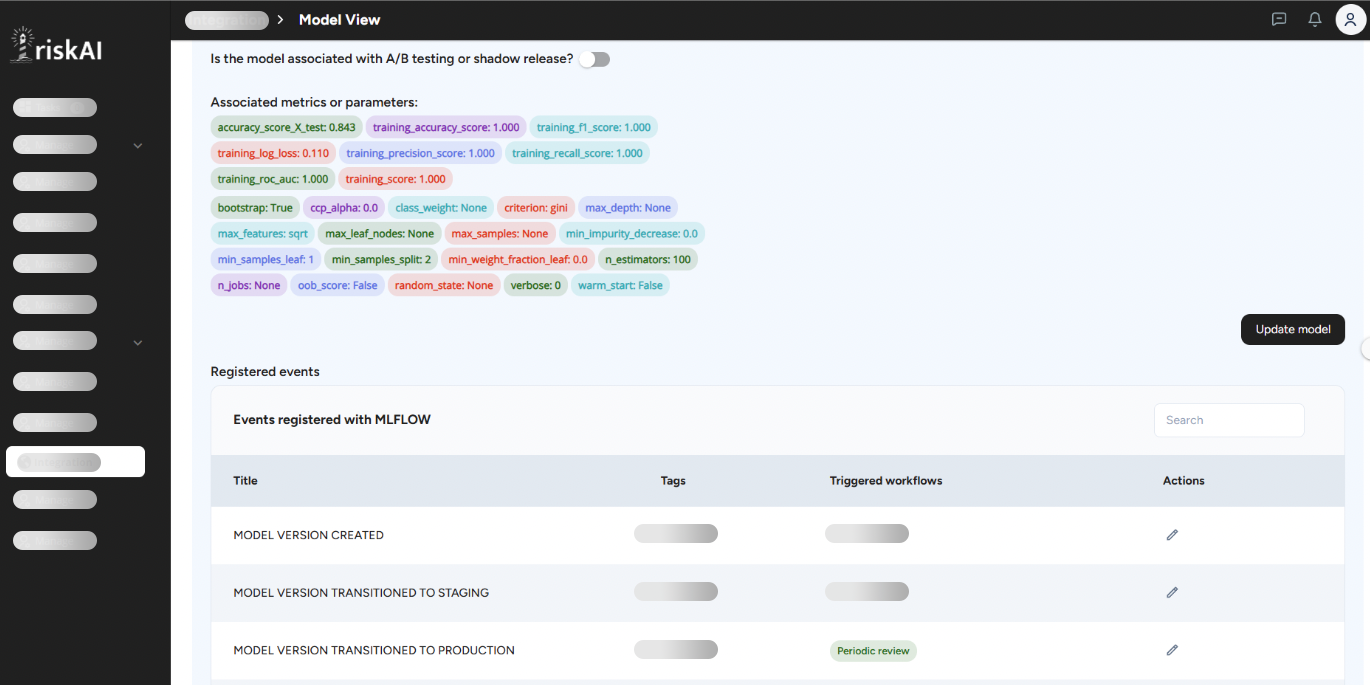

EVID-204 — Bias & Stability (Pre-Prod)

Timestamp: 2025-07-12 14:20 UTC

Result: PASS (adverse impact ratio, AIR ≥ 0.85; stability within thresholds)

Approvals: 1st Line ✔, Validator ✔

EVID-290 — Independent Validation Pack

Metrics: AUC 0.88; KS 0.47; Brier score 0.116; calibration slope 0.98

Challenger (XGBoost): AUC −1.2% relative to the champion

Outcome: Approved with minor remediation

EVID-315 — Approval Gate (Production)

Participants: Model Owner ✔, Model Risk ✔

Artifacts: Signed PDF, version hash, audit log

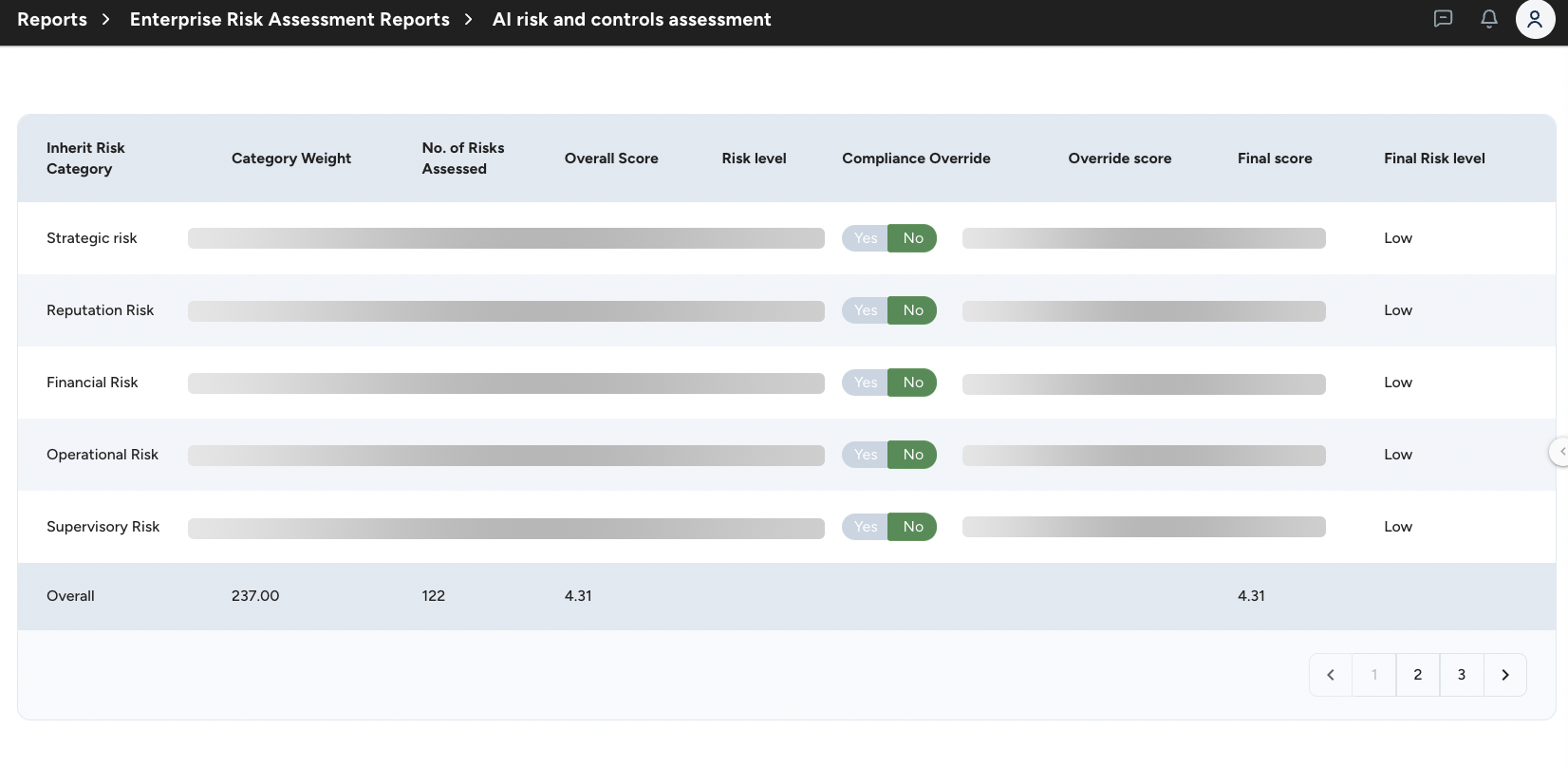

EVID-441 — Controls Assessment

Event: Controls assessment; control strength scored 4.3 out of 5

Action: None required (within tolerance)

Status: Compliant

5) Regulatory Mapping

EU AI Act

- Art. 6 Risk Tiering — POL-01
- Art. 10 Data & Governance — POL-05
- Art. 14 Human Oversight — POL-09
- Art. 72 Post-Market Monitoring — POL-12

ISO 23894

- 5.3 Risk assessment — Tiering & intake (POL-01)
- 6.2 Risk treatment & controls — Bias/validation (POL-05, POL-07)
- 6.3 Monitoring & review — Ops monitoring (POL-12)

NIST AI RMF

- Govern — Roles, policies, approvals (POL-01, POL-09)
- Measure — Fairness, robustness, performance (POL-05, POL-07)
- Manage — Alerts, incidents, CAPA (POL-12)

Banking Model Risk (e.g., SR 11-7 / ECB)

- Model inventory & classification — POL-01
- Independent validation & findings — POL-07
- Ongoing monitoring & change control — POL-12, POL-09

Appendix: Workflow Definitions

PROC-05-A BiasCheck — Pre-Prod

Trigger: Prior to validation gate

Inputs: Candidate model, validation dataset, protected attributes config

Steps: Run tests → generate PDF/JSON → attach to evidence → request approvals

Outputs: Report (PDF), metrics (JSON), approvals, audit log ID

PROC-07-B ValPack — Independent

Scope: Discrimination & calibration, conceptual soundness, backtesting, challenger

Independence: Separate validator role enforced by RBAC

Outputs: Validation PDF, findings & remediation plan, approval notes
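The independence requirement can be sketched as a role check that runs before a user is allowed to open or sign a validation. This is a minimal illustration, not RiskAI's access model. The role names, user addresses, `ModelRecord` fields, and the rule that a validator may hold no first-line role at all are assumptions.

```python
from dataclasses import dataclass

# Hypothetical role assignments; in practice these come from the bank's RBAC store.
ROLE_ASSIGNMENTS = {
    "model.owner@bank.example": {"model_owner", "developer"},
    "dev@bank.example": {"developer"},
    "validator@bank.example": {"validator"},
}

FIRST_LINE_ROLES = {"model_owner", "developer"}


@dataclass(frozen=True)
class ModelRecord:
    model_id: str
    owner: str
    developers: frozenset


def can_validate(user: str, model: ModelRecord, roles=ROLE_ASSIGNMENTS) -> bool:
    """True only for a validator who is independent of the model's development."""
    user_roles = roles.get(user, set())
    if "validator" not in user_roles:
        return False
    # Anyone who owns or built this model cannot validate it.
    if user == model.owner or user in model.developers:
        return False
    # Also refuse users who hold any first-line role alongside the validator role.
    return not (user_roles & FIRST_LINE_ROLES)
```

Checking both the role and the model-specific relationship matters. A user can legitimately hold the validator role in general and still be conflicted on one particular model.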

PROC-09-C Approval Gate — Prod

Trigger: Before production deploy or material change

Approvers: Model Owner (1st), Model Risk/Compliance (2nd)

Outputs: Signed PDF, version hash, immutable log entry
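One common way to make an audit log tamper-evident is hash chaining: each entry stores the hash of the previous entry, so editing or reordering any record breaks the chain. The sketch below pairs that idea with a SHA-256 version hash of the model artifact. It is an assumption about how such a log could be built, not a description of RiskAI's storage. Hash chaining makes tampering detectable rather than impossible; true immutability would also depend on the storage layer (for example, write-once storage), which is not modeled here. PDF signing is not shown either.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def version_hash(artifact: bytes) -> str:
    """SHA-256 digest of the serialized model artifact."""
    return hashlib.sha256(artifact).hexdigest()


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "prev_hash": prev,
        }
        entry_hash = _digest(body)
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS_HASH
        for entry in self.entries:
            body = {k: entry[k] for k in ("ts", "event", "prev_hash")}
            if entry["prev_hash"] != prev or _digest(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True
```

The entry hash returned by `append` is a natural candidate for the "audit log ID" that several workflows list among their outputs.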

Ops Monitoring (POL-12)

Trigger: Scheduled jobs & real-time alerts

Signals: Drift (PSI/CSI), data quality, latency, rejection rate shifts, incidents

Outputs: Alerts, incident record, CAPA, change ticket, audit log ID
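The drift signal can be sketched with the standard Population Stability Index (PSI) formula. CSI is the same calculation applied to a single input feature instead of the model score. Several choices here are assumptions rather than thresholds stated in this sample: the bin edges, the `eps` floor for empty bins, and the 0.10 / 0.25 status bands, which are a widely quoted industry rule of thumb.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Share of values in each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value, warn=0.10, alert=0.25):
    """Map a PSI/CSI value to a status using common rule-of-thumb bands."""
    if value >= alert:
        return "ALERT"
    if value >= warn:
        return "WARN"
    return "OK"
```

In a scheduled job, `expected` would be the development-sample scores (or one feature's values), and `actual` would be a recent production window. An `ALERT` status would then feed the alert and incident outputs listed above.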